The Manipulation of Decisions

Facebook Could Decide Who You Vote For

In 2012, the New York Times ran an article about how Target uses big data and analytics to determine whether a shopper is pregnant, before she wants anyone to know. Andrew Pole, one of Target’s statisticians, stated, “We knew that if we could identify them in their second trimester, there’s a good chance we could capture them for years.” This is the world we live in today, the world of big data. Because our digital footprint is so large, thousands of data points are available to steer the user toward a certain goal. This is not necessarily nefarious; it is the natural trajectory of an increasingly scientific approach to marketing.

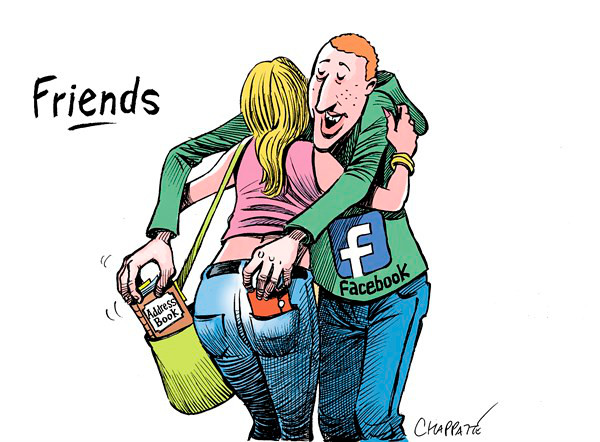

On April 10, 2018, Facebook CEO Mark Zuckerberg uncharacteristically donned a suit and testified before Congress regarding the Cambridge Analytica data breach, in which data collected from 87 million users was shared with the firm. Subconsciously we all know that corporations have marketing strategies to guide us in the directions they want. But with Facebook it’s different. Facebook is unassuming because it is seemingly a free service. But nothing is free in this world; the real value is mined from the collected user data. Facebook sells you, the user, or at least access to you. As the old saying goes, “If you’re not paying, you’re the product.”

Facebook’s News Feed curates articles and posts that are specifically tailored to the individual, with the goal of maximizing the time spent on the site. As most people are conflict-averse, this means your Feed slowly starts mirroring your political and religious views. Gradually the conversation becomes one-sided, and the user is insulated from opposing viewpoints. This is a dangerous byproduct of Facebook’s incredibly effective marketing, because engaging in real conversation is essential to democracy.

However, Zuckerberg did not testify because of this phenomenon or Facebook’s contribution to an increasingly polarized political arena, though the Russian “fake news” controversy was certainly a subject. Zuckerberg was forced to address Congress because Facebook has a fiduciary responsibility to protect its users’ data. When I go to the movie theater and buy a Coke because the sizzling, carbonated sound of the Coca-Cola commercial makes my mouth water, I recognize that this is quality marketing manipulating my senses. If I read a Facebook news article claiming a scandal in the Clinton Foundation, I may not recognize it as a politically motivated marketing move.

With the data collected, Cambridge Analytica built psychological and political profiles, then ran Facebook ads and social media marketing campaigns that targeted individuals based on their psychological disposition. Robert Mueller, special counsel for the U.S. Department of Justice, has referred to this as “information warfare.” Though the extent to which this affected the outcome of the 2016 election is not known, it is clear that millions of voters were systematically manipulated. It is the duty of those who collect this information to safeguard it, because we are tremendously susceptible to corporate and political manipulation.

The question to ask is: If Target can know that you’re pregnant through your purchasing trends and successfully lead you toward certain purchases, what does Facebook know about you through your social media interactions, and how could this data be used to manipulate your future decisions? As we wrestle with the unintended repercussions of emerging technology, it is of utmost importance that we are protected from corporate and political manipulation. Surely a company that is solely funded by advertising should not be wholly trusted to determine what user data is protected and how.